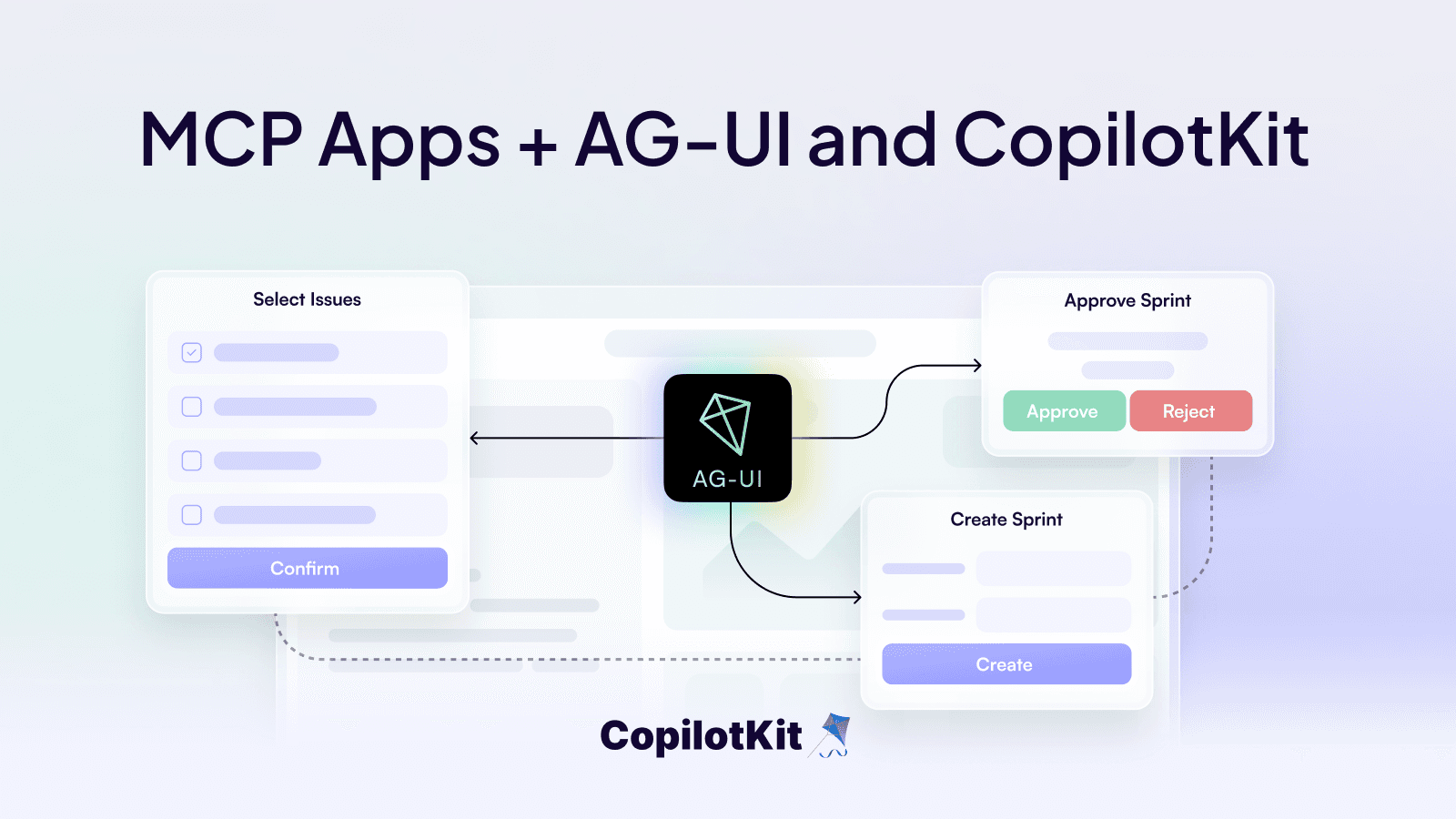

MCP Apps: Bring MCP Apps interaction to your users with CopilotKit!Bring MCP Apps to your users!

How human-in-the-loop (HITL) AI systems are bringing AI from demos to production.

"Make me money on the internet. Think step-by-step."

In the early days of GPT-4-level AI agents, tongue-in-cheek prompts like this one circulated, mostly for fun. No one really expected them to work, but they were worth a try, just in case.

When that didn't work, we shifted to more focused prompts, such as: “You are an experienced lawyer. Write a defense thesis for the given deposition, based on the following case details.” This approach yielded far better, and sometimes impressive, results, but not at the level required to make these systems a real substitute for professional legal expertise.

Now, a year and a half into the “agentic revolution,” the key lesson we’ve learned is that LLM intelligence is most effective when it works alongside human intelligence, in the build phase as well as in the run phase.

Let’s dig into what this looks like in engineering practice: some of the theory behind the approach, and specific examples of building copilots that leverage human-in-the-loop (what we call CoAgents).

Let’s start with the build phase. Build-time human-in-the-loop is about applying human intelligence to lay out the agent's "cognitive architecture": the sub-steps and sub-thoughts involved in completing a high-level task of a certain kind.

Experienced lawyers, for instance, implicitly or explicitly break the task of writing a defense thesis into a complex process with many sub-steps. For example, they may scan the deposition for independent allegations, address each allegation separately, and finally combine the results into a single coherent defense thesis. Each allegation review can itself be a complex process, e.g. researching relevant precedent or scanning for an alibi.

When building an AI agent designed to aid lawyers in this task, a programmer lays out the (human) domain expert’s knowledge in code as a kind of “agentic standard operating procedure” (SOP), encoding deep expertise about how the given task ought to be tackled.
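To make the idea concrete, here is a minimal TypeScript sketch of the defense-thesis SOP described above, written as plain functions. The `Model` type, the prompts, and every function name are hypothetical; a real agent would call an LLM API (and likely a framework such as LangGraph) rather than an injected function. The point is only that the decomposition into sub-steps is fixed in code by the developer, not improvised by the model at run time.

```typescript
// Hypothetical model call: prompt in, text out. Injected so the SOP is testable;
// a real agent would call an LLM API here.
type Model = (prompt: string) => string;

interface AllegationReview {
  allegation: string;
  precedent: string;
  alibi: string;
}

// Step 1: scan the deposition for independent allegations (one per output line).
function findAllegations(model: Model, deposition: string): string[] {
  return model(`List each independent allegation, one per line:\n${deposition}`)
    .split("\n")
    .map((line) => line.trim())
    .filter((line) => line.length > 0);
}

// Step 2: review a single allegation; this sub-task has its own sub-steps.
function reviewAllegation(model: Model, allegation: string): AllegationReview {
  return {
    allegation,
    precedent: model(`Find relevant precedent for: ${allegation}`),
    alibi: model(`Scan the case details for an alibi regarding: ${allegation}`),
  };
}

// Step 3: combine the per-allegation reviews into one coherent thesis.
function combineThesis(model: Model, reviews: AllegationReview[]): string {
  const notes = reviews
    .map((r) => `- ${r.allegation}\n  precedent: ${r.precedent}\n  alibi: ${r.alibi}`)
    .join("\n");
  return model(`Write a single coherent defense thesis from these notes:\n${notes}`);
}

// The SOP itself: the order of sub-steps is decided at build time by the developer.
function writeDefenseThesis(model: Model, deposition: string): string {
  const reviews = findAllegations(model, deposition).map((a) => reviewAllegation(model, a));
  return combineThesis(model, reviews);
}
```

Because the model is injected, the SOP can be exercised with a stub: for a deposition containing two allegations it issues six model calls (one scan, two sub-steps per allegation, one final combination).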

The boundaries between the individual sub-tasks can stretch and bend depending on the complexity of the task, as well as the capabilities of the underlying model.

But the critical point is that the cognitive architecture of the overall system is now an adjustable parameter that can be optimized as needed.

To provide an example closer to the world of software engineering, consider an agent designed to answer a search query. Its cognitive architecture might look like this:

This mimics how an experienced researcher tackles complex questions, leveraging both broad knowledge and specific skills.

Early frameworks and platforms in the AI space, such as the OpenAI Assistants API, were built for one-size-fits-all, open-ended systems that offer no opportunity for build-time HITL intervention. While convenient to deploy, such systems have proved limited and tend to yield undifferentiated products.

Over the past year, advanced frameworks have emerged for efficiently and explicitly bringing build-time HITL into the agentic space by customizing the agent’s cognitive architecture. Most notably, LangGraph by LangChain has become a powerful tool in this space, enabling sophisticated and domain-specific cognitive architectures.

LangGraph Cloud takes the place of the OpenAI Assistants API for cognitively architected agents.

As a simple example, here's the cognitive architecture of an agent designed to perform rudimentary research on behalf of a user - as visualized in LangGraph Studio.

This visualization provides a simplified representation of the agent workflow as defined in LangGraph, demonstrating how different components of the cognitive architecture are assembled together.
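The core idea behind such a graph can be sketched without any framework. The following is a hypothetical, dependency-free TypeScript illustration, not LangGraph's API: nodes are functions that return partial state updates, edges choose the next node from the updated state, and the architecture itself is just a data structure. The node names (`plan`, `search`, `summarize`) and the stubbed search results are assumptions for illustration and do not reproduce the graph in the visualization.

```typescript
// Shared state passed between nodes (hypothetical shape for a research agent).
interface ResearchState {
  question: string;
  queries: string[];
  findings: string[];
  answer?: string;
}

// A node reads the state and returns a partial update.
type NodeFn = (state: ResearchState) => Partial<ResearchState>;
// An edge picks the next node name (or "END") from the updated state.
type EdgeFn = (state: ResearchState) => string;

interface Graph {
  entry: string;
  nodes: Record<string, NodeFn>;
  edges: Record<string, EdgeFn>;
}

// Generic runner: it knows nothing about research, only about nodes and edges.
function runGraph(graph: Graph, initial: ResearchState, maxSteps = 20): { state: ResearchState; trace: string[] } {
  let state = initial;
  let current = graph.entry;
  const trace: string[] = [];
  for (let step = 0; step < maxSteps && current !== "END"; step++) {
    const node: NodeFn | undefined = graph.nodes[current];
    const edge: EdgeFn | undefined = graph.edges[current];
    if (node === undefined || edge === undefined) throw new Error(`unknown node: ${current}`);
    trace.push(current);
    state = { ...state, ...node(state) };
    current = edge(state);
  }
  return { state, trace };
}

// The cognitive architecture as data (all nodes are stubs; a real agent would call an LLM and a search tool).
const researchGraph: Graph = {
  entry: "plan",
  nodes: {
    plan: (s) => ({ queries: [`${s.question} overview`, `${s.question} examples`] }),
    search: (s) => ({ findings: [...s.findings, `result for "${s.queries[s.findings.length]}"`] }),
    summarize: (s) => ({ answer: s.findings.join("; ") }),
  },
  edges: {
    plan: () => "search",
    // Loop back to search until every query has a finding.
    search: (s) => (s.findings.length < s.queries.length ? "search" : "summarize"),
    summarize: () => "END",
  },
};
```

Because the architecture lives in `researchGraph` rather than in the runner, adjusting it (for example, inserting a human review node between `search` and `summarize`) only changes data, which is one way to read the claim that the cognitive architecture is an adjustable parameter.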

Let’s get into what we mean by runtime human-in-the-loop. Even with meticulous cognitive architecture design, full autonomy remains hard to achieve. We are learning time and again that it’s far, far easier to get an agent to perform 70%, 80%, even 90% or 95% of a given task than to have it perform the task fully autonomously.

But here’s the crucial insight: agents needn't be perfect to be useful.

Runtime human-in-the-loop AI is about AI agents designed to work alongside people, rather than fully autonomously.

LangGraph, which we discussed earlier in the context of build-time HITL, also provides agentic-backend infrastructure for runtime HITL via checkpoints, pauses, streaming, and rewind/replay capabilities, which we will highlight in a moment. At some point these runtime HITL capabilities must interface with actual humans, typically through applications.

This is where tools like CopilotKit🪁 come into the picture.

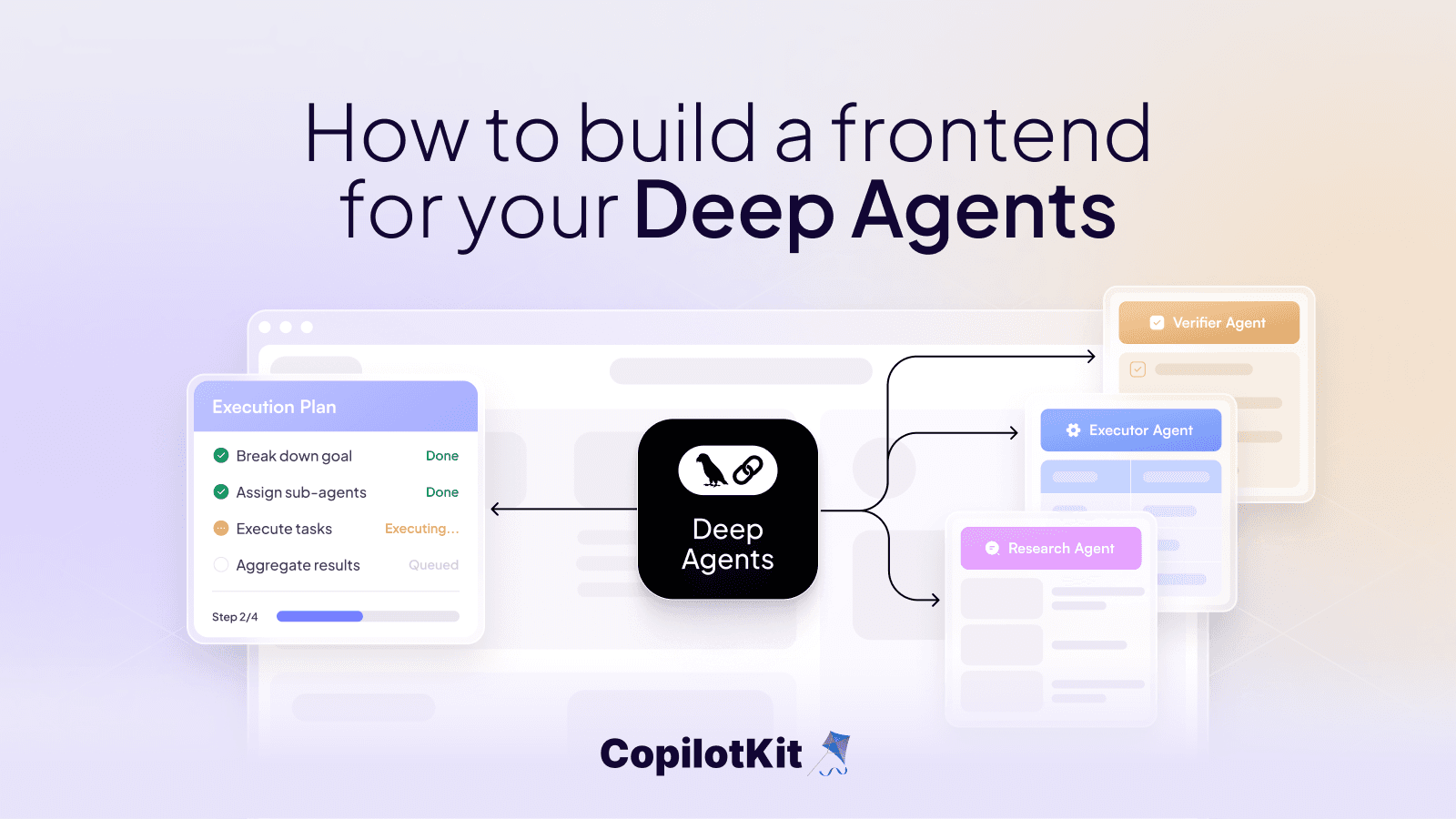

CopilotKit provides infrastructure for bringing runtime HITL to React applications, via a “specialized socket” purpose-built to facilitate app-agent interaction, as well as an optional built-in UI.

We call these human-centric agents CoAgents. Here’s how this shows up in your code:

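```typescript
import { useCoAgent } from "@copilotkit/react-core";

// Called inside a React component
const { state, setState } = useCoAgent({
  name: "research_agent",
  // optionally provide an initial state
  initialState: { /* ... */ },
  handler,
});
```

We’ve identified four key ingredients in our initial CoAgent implementation, focused on the most critical use cases:

1. Streaming intermediate agent state
2. Shared state between the agent and the application
3. Agentic Q&A (the agent asks the user questions)
4. Agent steering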

Let’s review these one by one:

Agents can, and often do, take many seconds to execute: 30 seconds or longer is not uncommon with current technology. End users can’t sit and stare at a loading indicator for that long; the experience feels broken.

By streaming the intermediate agent state to the application (even during the execution of a LangGraph node), you can reflect the workings of the agent to the user with native application UX and build production-grade experiences into user-facing products.
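A minimal sketch of the pattern, in hypothetical, framework-free TypeScript (not CopilotKit's or LangGraph's API): the agent yields a snapshot of its intermediate state after each unit of work, and the application renders every snapshot as it arrives. The `AgentProgress` shape and function names are assumptions. A synchronous generator keeps the example self-contained; in production the snapshots would arrive asynchronously over a network stream.

```typescript
// Hypothetical shape of the state an agent emits while it works.
interface AgentProgress {
  step: string;
  done: boolean;
  completed: string[];
}

// A long-running agent written as a generator: it yields a snapshot of its
// intermediate state after each unit of work instead of only returning at the end.
function* runAgent(steps: string[]): Generator<AgentProgress, string, unknown> {
  const completed: string[] = [];
  for (const step of steps) {
    completed.push(step); // stand-in for real work (LLM calls, tool calls, ...)
    yield { step, done: false, completed: [...completed] };
  }
  yield { step: "complete", done: true, completed: [...completed] };
  return `ran ${steps.length} steps`;
}

// The application turns each snapshot into UI, so the user sees progress
// rather than a bare loading indicator.
function renderProgress(progress: AgentProgress): string {
  return progress.done ? "Done" : `Working on ${progress.step} (${progress.completed.length} so far)`;
}
```

For a two-step run, `for (const p of runAgent(["search", "summarize"])) console.log(renderProgress(p));` shows three successive UI states: one per step, then a final "Done".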

There are many cases in which you want an agent and a human to collaborate over the same piece of state. CopilotKit’s CoAgents infrastructure provides a mechanism for syncing agent state back and forth between the application and agent layers.
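In the `useCoAgent` snippet earlier, the returned `state` / `setState` pair is the application's handle on this shared state. The class below is a hypothetical, framework-free TypeScript sketch of the underlying idea, not how CopilotKit implements it: both sides write partial updates to one store, and every write notifies subscribers along with which side made it, so the agent can react to the user's edits and vice versa. `SharedState`, `TripPlan`, and the trip values are invented for illustration, and conflict handling is deliberately naive (last write wins).

```typescript
type Side = "agent" | "app";
type Listener<S> = (state: S, source: Side) => void;

class SharedState<S extends object> {
  private state: S;
  private listeners: Listener<S>[] = [];

  constructor(initial: S) {
    this.state = initial;
  }

  get(): S {
    return this.state;
  }

  // Either side applies a partial update (last write wins); all subscribers are notified.
  update(patch: Partial<S>, source: Side): void {
    this.state = { ...this.state, ...patch };
    for (const listener of this.listeners) listener(this.state, source);
  }

  // Returns an unsubscribe function.
  subscribe(listener: Listener<S>): () => void {
    this.listeners.push(listener);
    return () => {
      this.listeners = this.listeners.filter((l) => l !== listener);
    };
  }
}

// Hypothetical shared state for a trip-planning agent.
interface TripPlan {
  destination: string;
  nights: number;
}

const trip = new SharedState<TripPlan>({ destination: "Lisbon", nights: 3 });
const agentSaw: string[] = [];
// The agent listens for edits the user makes in the UI.
trip.subscribe((state, source) => {
  if (source === "app") agentSaw.push(`user changed the plan: ${state.nights} nights`);
});
trip.update({ destination: "Porto" }, "agent"); // the agent proposes a change
trip.update({ nights: 5 }, "app"); // the user edits the same piece of state
```

After both writes the store holds the agent's destination and the user's night count, and the agent's listener has seen only the user's edit.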

You often want agents to ask the end user questions like “Would you like to approve this plan of action?” or “Prices are much lower the following week. Would you like to explore delaying your vacation?”

This agentic Q&A can take the form of text questions and text answers (via the chat UI), as well as “JSON questions” and “JSON answers” handled through native in-app UX and structured data.
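One way to model a “JSON question” is an agent that suspends on a typed question object and resumes with a typed answer. The TypeScript generator below is a hypothetical sketch of that pause/resume contract, not CopilotKit's or LangGraph's API; `ApprovalQuestion`, `ApprovalAnswer`, `planningAgent`, and the plan contents are invented for illustration. In a real system the pause would be persisted (for example, as a checkpoint) while the app waits for the user.

```typescript
// A structured question the agent can pause on.
interface ApprovalQuestion {
  kind: "approve_plan";
  plan: string[];
}

// The structured answer the app sends back once the user responds.
interface ApprovalAnswer {
  approved: boolean;
  edits?: string[];
}

// The agent yields a question and suspends until the app resumes it with an answer.
function* planningAgent(goal: string): Generator<ApprovalQuestion, string, ApprovalAnswer> {
  const plan = [`research ${goal}`, `draft ${goal}`, `publish ${goal}`];
  const answer = yield { kind: "approve_plan", plan };
  if (!answer.approved) return "stopped: plan rejected by user";
  const finalPlan = answer.edits ?? plan; // the user may hand back an edited plan
  return `executing: ${finalPlan.join(" -> ")}`;
}

// App side: drive the agent, answering each question with the user's response.
// `ask` stands in for rendering the question as native UI and awaiting input.
function runWithUser(goal: string, ask: (q: ApprovalQuestion) => ApprovalAnswer): string {
  const agent = planningAgent(goal);
  let step = agent.next();
  while (!step.done) {
    step = agent.next(ask(step.value));
  }
  return step.value;
}
```

Approving with `runWithUser("blog post", () => ({ approved: true }))` lets the agent run its original plan, while an answer carrying `edits` makes it run the user's version instead.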

Suppose an agent makes a mistake in step 4 of a 9-step process. Everything after step 4, including the end result, would be useless, and we would be forced to discard all of the agent’s work, including the three successful steps that preceded the mistake.

Agent steering allows an end user to zoom in on step 4, correct it alongside the agent, and replay the agent’s operations from that point onwards. This capability is built on LangGraph’s checkpointer and time-travel features.
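The following TypeScript sketch illustrates steering under simplifying assumptions; it is hypothetical and does not use LangGraph's checkpointer API. Every step's output is saved as a full snapshot. Steering replaces the snapshot of the faulty step with the user's correction and replays only the steps after it, so the work done before the mistake is kept. `Step`, `runFrom`, and `steer` are invented names.

```typescript
interface Step<S> {
  name: string;
  run: (state: S) => S;
}

// Run steps[from..] starting from `state`. checkpoints[i] is the state right after
// step i, so the snapshots for steps before `from` are carried over from `history`.
function runFrom<S>(steps: Step<S>[], state: S, from: number, history: S[]): S[] {
  const checkpoints = history.slice(0, from);
  let current = state;
  for (const step of steps.slice(from)) {
    current = step.run(current);
    checkpoints.push(current);
  }
  return checkpoints;
}

// Steering: the user corrects the output of step `bad` (0-based), then the agent
// replays from the following step. Steps before `bad` are not re-executed.
function steer<S>(steps: Step<S>[], checkpoints: S[], bad: number, correct: (wrong: S) => S): S[] {
  const wrong = checkpoints[bad];
  if (wrong === undefined) throw new RangeError(`no checkpoint for step index ${bad}`);
  const fixed = correct(wrong);
  return runFrom(steps, fixed, bad + 1, [...checkpoints.slice(0, bad), fixed]);
}

// Example: a 9-step process in which step 4 (index 3) goes wrong.
let executed = 0;
const steps: Step<string[]>[] = Array.from({ length: 9 }, (_, i) => ({
  name: `step ${i + 1}`,
  run: (state: string[]) => {
    executed++;
    return [...state, i === 3 ? "WRONG" : `ok${i + 1}`];
  },
}));

const firstRun = runFrom<string[]>(steps, [], 0, []);
// The user fixes step 4's output; only steps 5 through 9 run again.
const steered = steer(steps, firstRun, 3, (wrong) => [...wrong.slice(0, -1), "ok4 (fixed)"]);
```

Storing full snapshots keeps the sketch simple at the cost of memory; a production checkpointer would persist snapshots to storage so that a run can be resumed or replayed later.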

The path to more advanced AI systems likely involves continuously refining both runtime and build-time HITL processes. Andrej Karpathy's insight is particularly relevant here:

This suggests that HITL signals, particularly the nuanced, step-by-step thought processes of humans as they work alongside AI and even correct agent mistakes, may constitute some of the most crucial datasets for advancing transformer technology.

In my view, the ultimate goal of the AI revolution isn't to replace human consciousness, but to augment it. I'm hopeful that as we refine our HITL processes, we're moving towards an exciting future where AI becomes an extension of human consciousness - a powerful tool that amplifies our creativity, insight, and problem-solving abilities. This symbiosis could unlock new realms of human potential, allowing us to tackle challenges and explore ideas beyond what either AI or human minds could achieve alone.

Apply here to access our beta version of CoAgents.
