MCP Apps: Bring MCP Apps interaction to your users with CopilotKit!Bring MCP Apps to your users!

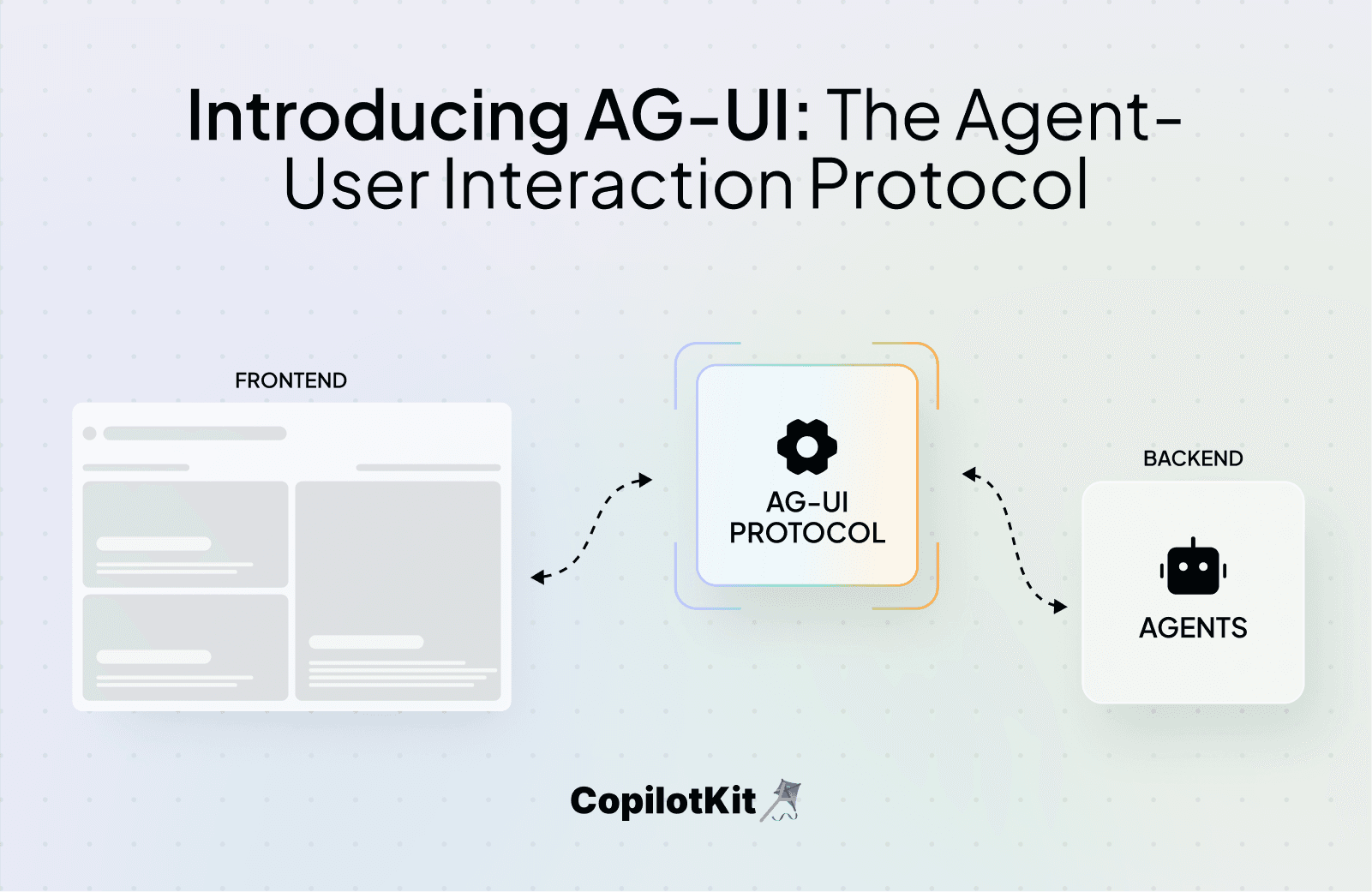

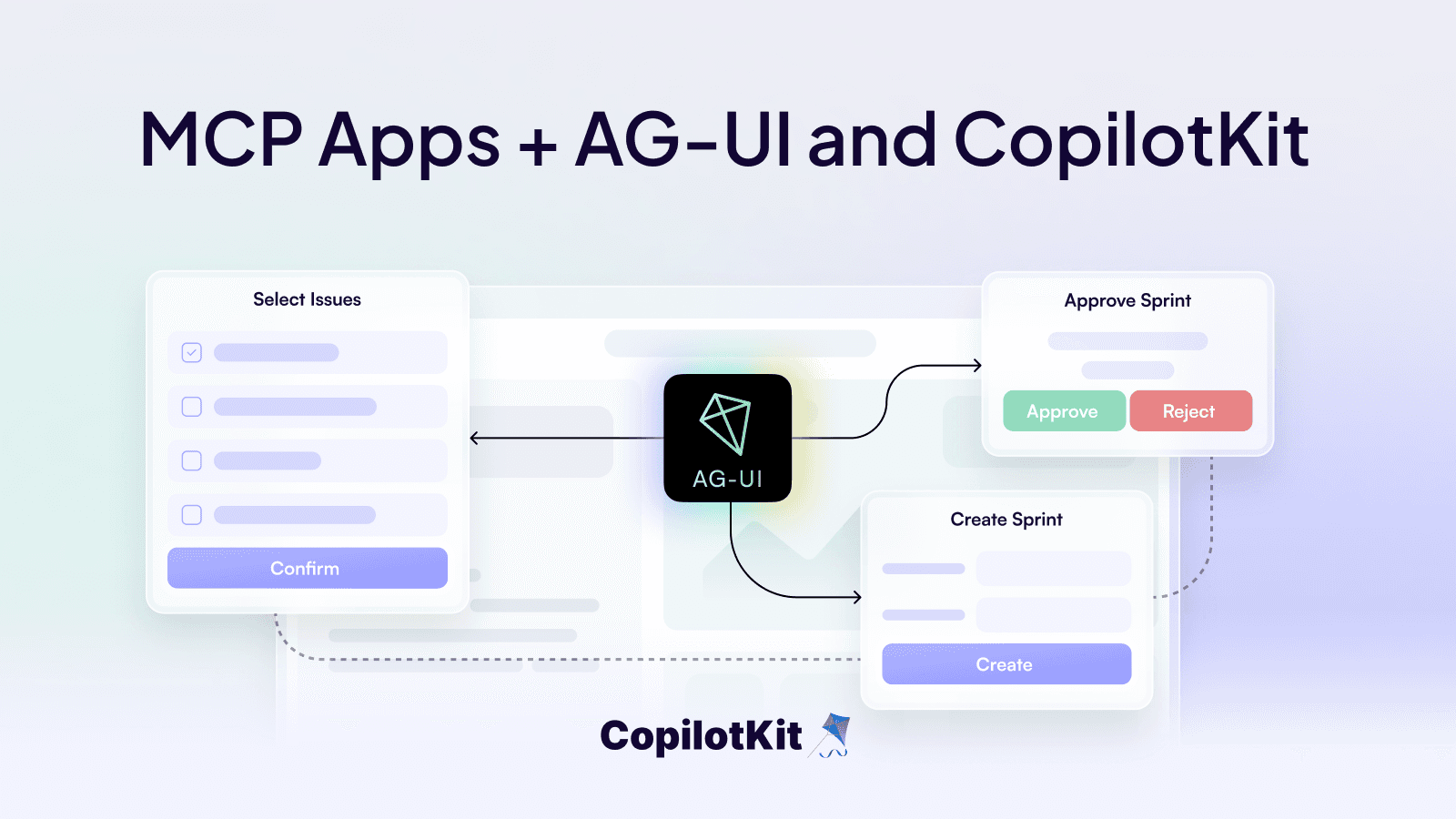

We're thrilled to announce AG-UI, the Agent-User Interaction Protocol, a streamlined bridge connecting AI agents to real-world applications.

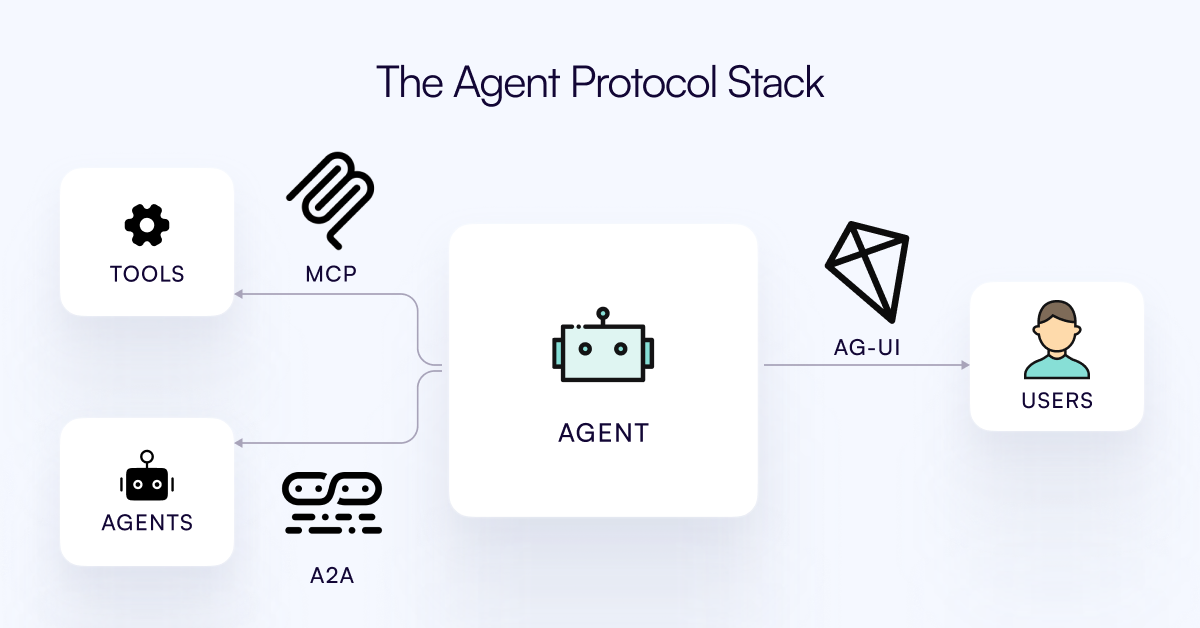

AG-UI is an open, lightweight protocol that streams a single JSON event sequence over standard HTTP or an optional binary channel. These events (messages, tool calls, state patches, lifecycle signals) flow between your agent backend and front-end interface, keeping the two synchronized in real time.
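Here is a minimal Python sketch of the "single JSON event sequence over HTTP" idea. A server-side generator serializes each event as JSON and frames it as a Server-Sent Events `data:` line. Several details are assumptions for illustration, not the AG-UI SDK's API: the SSE framing, the camelCase field names (`threadId`, `runId`, `messageId`, `delta`), and the helper names `encode_sse` and `stream_reply`. The specification at docs.ag-ui.com defines the actual wire format.

```python
import json
from typing import Any, Dict, Iterator, List

# Hypothetical event shape: a dict with a "type" plus a small payload.
Event = Dict[str, Any]


def encode_sse(event: Event) -> str:
    """Frame one event as a Server-Sent Events message (assumed transport)."""
    # Compact separators keep each event on a single line.
    return "data: " + json.dumps(event, separators=(",", ":")) + "\n\n"


def stream_reply(thread_id: str, run_id: str, message_id: str, chunks: List[str]) -> Iterator[str]:
    """Yield a run's lifecycle and text events in order, one SSE frame per event."""
    yield encode_sse({"type": "RUN_STARTED", "threadId": thread_id, "runId": run_id})
    yield encode_sse({"type": "TEXT_MESSAGE_START", "messageId": message_id, "role": "assistant"})
    for chunk in chunks:
        # Each partial piece of text is its own event, so the UI can render it immediately.
        yield encode_sse({"type": "TEXT_MESSAGE_CONTENT", "messageId": message_id, "delta": chunk})
    yield encode_sse({"type": "TEXT_MESSAGE_END", "messageId": message_id})
    yield encode_sse({"type": "RUN_FINISHED", "threadId": thread_id, "runId": run_id})


if __name__ == "__main__":
    for frame in stream_reply("t-1", "run-1", "msg-1", ["Hello", ", world"]):
        print(frame, end="")
```

In a web framework, a generator like this would typically be returned as a streaming response with the `text/event-stream` content type. Nothing has to be buffered, so the first text chunk can reach the browser as soon as the agent produces it.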

Get started in minutes using our TypeScript or Python SDK with any agent backend (OpenAI, Ollama, LangGraph, or custom code). Visit docs.ag-ui.com for the specification, quick-start guide, and interactive playground.

Today’s AI agent ecosystem is maturing. Agents are moving from viral demos into real production use, including at some of the largest enterprises in the world.

However, the ecosystem has largely focused on backend automation: processes that run independently with limited user interaction. These workflows are triggered manually or automatically, and their output is used afterward.

Common use cases include data migration, research and summarization, and form-filling.

They tend to be simple, repeatable workflows where accuracy can be ensured, or where 80% accuracy is good enough.

These have already been big productivity boosters, automating time-consuming and tedious tasks.

Coding tools (Devin vs. Cursor)

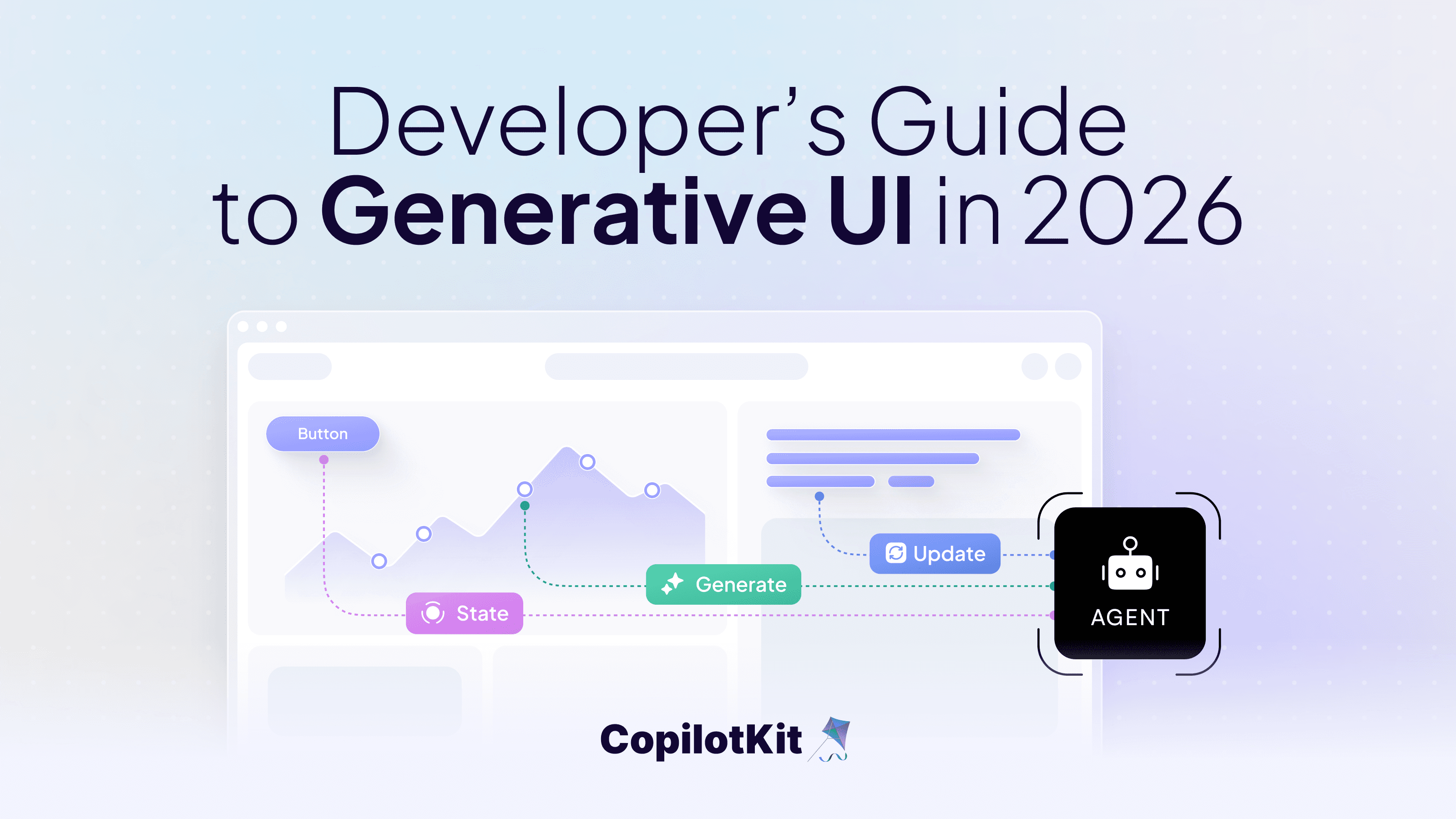

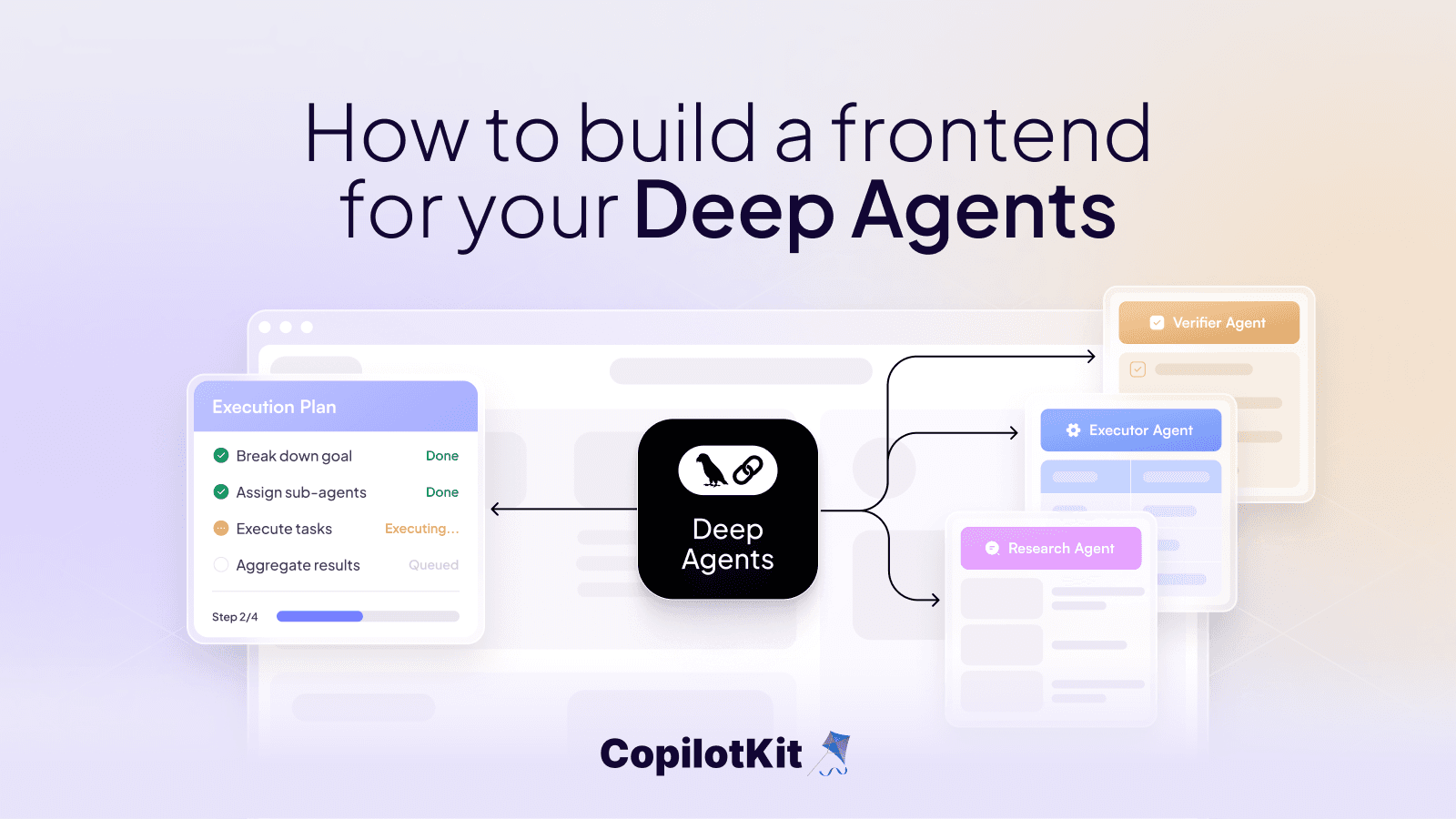

Throughout the adoption of generative AI, coding tools have been the canary in the coal mine, and Cursor is the best example of a user-interactive agent: an AI agent that works alongside users in a shared workspace.

This contrasts with Devin, which promised a fully autonomous agent that automates high-level work.

For many of the most important use cases, agents are most helpful when they work alongside users. This means users can see what the agent is doing, co-work on the same output, and easily iterate together in a shared workspace.

Creating these collaborative experiences presents significant technical challenges:

Your client makes a single POST request to the agent endpoint, then listens to a unified event stream. Each event has a type (e.g., TEXT_MESSAGE_CONTENT, TOOL_CALL_START, STATE_DELTA) and a minimal payload. Agents emit events as they occur, and UIs respond accordingly: displaying partial text as it streams in, rendering visualizations when tool calls complete, or updating the interface when state changes.
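On the client side, this loop can be sketched as a handler that folds each incoming event into UI state. The minimal Python sketch below rests on several assumptions. Events are plain dicts with a `type` field and camelCase payload keys (`messageId`, `delta`, `toolCallId`, `toolCallName`). A STATE_DELTA carries a list of JSON Patch (RFC 6902) operations, and only `add`/`replace`/`remove` on object keys are handled. The `UIState` class, `handle_event`, `apply_patch`, and the `render_chart` tool name are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class UIState:
    """Hypothetical view model that a front end could render from the event stream."""
    messages: Dict[str, str] = field(default_factory=dict)  # messageId -> text so far
    tool_calls: Dict[str, Dict[str, str]] = field(default_factory=dict)  # toolCallId -> name/args
    shared: Dict[str, Any] = field(default_factory=dict)  # state shared with the agent
    running: bool = False


def apply_patch(doc: Dict[str, Any], ops: List[Dict[str, Any]]) -> None:
    """Apply a small subset of JSON Patch in place: add/replace/remove on object keys.

    Simplification: missing intermediate objects are created, and array indices are not supported.
    """
    for op in ops:
        # Split the JSON Pointer and unescape "~1" -> "/" first, then "~0" -> "~".
        keys = [k.replace("~1", "/").replace("~0", "~") for k in op["path"].split("/")[1:]]
        target = doc
        for key in keys[:-1]:
            target = target.setdefault(key, {})
        if op["op"] in ("add", "replace"):
            target[keys[-1]] = op["value"]
        elif op["op"] == "remove":
            del target[keys[-1]]
        else:
            raise ValueError(f"unsupported patch op: {op['op']}")


def handle_event(state: UIState, event: Dict[str, Any]) -> None:
    """Fold one event into the UI state; event types not listed here are ignored."""
    kind = event["type"]
    if kind == "RUN_STARTED":
        state.running = True
    elif kind == "TEXT_MESSAGE_START":
        state.messages[event["messageId"]] = ""
    elif kind == "TEXT_MESSAGE_CONTENT":
        # Append partial text so the UI can show it while the agent is still generating.
        state.messages[event["messageId"]] += event["delta"]
    elif kind == "TOOL_CALL_START":
        state.tool_calls[event["toolCallId"]] = {"name": event["toolCallName"], "args": ""}
    elif kind == "TOOL_CALL_ARGS":
        # Tool arguments are assumed to arrive as streamed fragments of a JSON string.
        state.tool_calls[event["toolCallId"]]["args"] += event["delta"]
    elif kind == "STATE_DELTA":
        # The delta is assumed to be a list of JSON Patch operations.
        apply_patch(state.shared, event["delta"])
    elif kind in ("RUN_FINISHED", "RUN_ERROR"):
        state.running = False


if __name__ == "__main__":
    state = UIState()
    for ev in [
        {"type": "RUN_STARTED", "runId": "r-1"},
        {"type": "TEXT_MESSAGE_START", "messageId": "m-1", "role": "assistant"},
        {"type": "TEXT_MESSAGE_CONTENT", "messageId": "m-1", "delta": "Plotting "},
        {"type": "TEXT_MESSAGE_CONTENT", "messageId": "m-1", "delta": "sales"},
        {"type": "TOOL_CALL_START", "toolCallId": "c-1", "toolCallName": "render_chart"},
        {"type": "TOOL_CALL_ARGS", "toolCallId": "c-1", "delta": '{"metric": "sales"}'},
        {"type": "STATE_DELTA", "delta": [{"op": "add", "path": "/chart/metric", "value": "sales"}]},
        {"type": "RUN_FINISHED", "runId": "r-1"},
    ]:
        handle_event(state, ev)
    print(state)
```

The handler depends only on the events it receives, so replaying the same sequence always produces the same view state. That property makes the UI easy to test and lets it rebuild its view from a recorded stream.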

Built on standard HTTP, AG-UI integrates smoothly with existing infrastructure while offering an optional binary serializer for performance-critical applications.

AG-UI establishes a consistent contract between agents and interfaces, eliminating the need for custom WebSocket formats and text-parsing hacks. With this unified protocol:

AG-UI isn't just a technical specification; it's the foundation for the next generation of AI-enhanced applications, enabling seamless collaboration between humans and agents.

→ Book a call and connect with our team

Please include who you are, what you're building, and your company size in the meeting description, and we'll help you get started today!

We'd love to get your feedback. Please join our AG-UI Discord community and be part of the conversation.

Start building today at docs.ag-ui.com
